A Guided Tour of the 2mb Fork

Increasing the block size limit from 1 million bytes to 2 million bytes sounds so simple: just change the “1” to a “2” in the source code and we’re done, right?

If we didn’t care about a smooth upgrade, then it could be that simple. Just change this line of code (in src/consensus/consensus.h):

... MAX_BLOCK_SIZE=1000000

to:

... MAX_BLOCK_SIZE=2000000

If you make that one-byte change then recompile and run Bitcoin Core, it will work. You computer will download the blockchain and will interoperate with the other computers on the network with no issues.

If your computer is assembling transactions into blocks (you are a solo miner or a mining pool operator), then things get more complicated. I’ll walk through that complexity in the rest of this blog post, hopefully giving you a flavor for the time and care put into making sure consensus-level changes are safe.

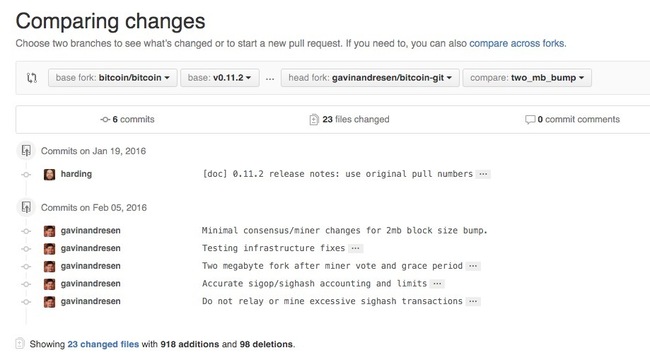

Github has a handy feature to compare code side-by-side; go to https://github.com/bitcoin/bitcoin/compare/v0.11.2…gavinandresen:two_mb_bump to see just the code changes for the two-megabyte fork. You should see something like this:

There are five “commits” – groups of code changes that go together– that implement the limit increase (ignore the first commit by David Harding, that’s just the last commit in the Bitcoin Core 0.11.2 release).

Twenty-two files changed, with about 900 new lines of code – more than half of which (500 lines) are new tests to make sure the new code works properly.

The first commit is “Minimal consensus/miner changes for 2mb block size bump”, and is small – under twenty lines of new code. You can see the one-line change of MAX_BLOCK_SIZE from 1,000,000 bytes to 2,000,000 bytes; the rest of the changes are needed by miners so they know whether it is safe to produce bigger blocks. A new MaxBlockSize() method is defined that returns either the old or new maximum block size, depending on the timestamp in the 80-byte block header. I might write an entire blog post about why it uses the timestamp instead of the block height or the median time of the last eleven blocks or something else… but not today.

The consensus change is on line 2816 of main.cpp in the CheckBlock() method, and just uses the new MaxBlockSize() method to decide whether or not a block is too big instead of MAX_BLOCK_SIZE. A similar change is made to the CreateNewBlock() function in miner.cpp and the ‘getblocktemplate’ remote procedure call so miners can create bigger blocks.

The next commit (“Testing infrastructure fixes”) adds a couple of features and fixes a bug in the code we use to test changes to the Bitcoin code.

There are two levels of code-testing-code; unit tests are put into the tree at src/test/, are written in C++, and very low level, testing to make sure various pieces of code behave properly. There are also regression tests in the tree at qa/rpc-tests/. They are written in Python and use the RPC (remote procedure call) interface to the command-line bitcoind running in “-regtest mode” to make sure everything is working properly.

“Two megabyte fork after miner vote and grace period” is by far the biggest of the commits– almost 700 lines of new code. It implements the roll-out rules: only allow bigger blocks 28 days after 75% of hashpower has shown support by producing blocks with a special bit set in the block version number.

75% and 28-days are fairly arbitrary choices. I hate arbitrary choices, mostly because it is so easy for everybody to have an opinion about them (also known as “bikeshedding”) and to spend days in unfruitful argument. I’ve written another blog post explaining why we think those are good numbers to choose.

The miner-vote-and-grace-period code comes from the BIP 101 implementation I did for Bitcoin XT, and has three levels of tests.

There is a new unit test in block_size_tests.cpp. It tests the CheckBlock() call, creating blocks that are exactly at the old or new size limits, or exactly one byte bigger than the old or new size limits, and testing to make sure they are or are not accepted based on whether their timestamps are before or after (or exactly at) the time when larger blocks are allowed.

There is also a new regression test, bigblocks.py. It runs four copies of bitcoind, creates a block-chain just for testing on the developer’s machine (in -regtest mode blocks can be created instantly) and then tests the fork activation code, making sure that miner votes are counted correctly, that blockchain re-organizations are handled properly, that bigger blocks will not be created until after the grace period is over. It also makes sure this code will report itself as obsolete if 75% of hashing power does not adopt the change before the January, 2018 deadline.

The vast majority of my development time was spent making sure that the regression and unit tests thoroughly exercised the new code. Then once the regression and unit tests pass, more testing is done on the test network across the Internet. Writing the code is the easy part.

And finally, this rollout code was extensively tested by Jonathan Toomim when he tested the eight megabyte blocks and Bitcoin XT on the testnet across the Internet (and across the great firewall of China).

Continuing the exploration of the code changes…

There are changes to the IsSuperMajority() function in main.cpp and a new VersionKnown() function in block.h that are used to count up the number of blocks that support various changes. The complexity here is to be compatible with BIP 009 (“version bits”) and various soft forks BIPs (68, 112, 113, 141) that might happen at the same time as the block size limit increase.

The largest number of new lines of code (besides tests) is to txdb.cpp. When the miner vote succeeds, the hash of the triggering block is written to the block chain index. That isn’t strictly necessary– the code could scan all the headers in the block chain to figure out if the vote succeeded every time it started up. It is just much more efficient to store that piece of information in the block chain index database.

Phew. Describing all that probably took longer than writing the code. Two more commits to go.

“Accurate sigop/sighash accounting and limits” is important, because without it, increasing the block size limit might be dangerous. You can watch my presentation at DevCore last November for the details, but basically Satoshi didn’t think hard enough about how transactions are signed, and as a result it is possible to create very large transactions that are very expensive to validate. This commit cleans up some of that “technical debt,” implementing a new ValidationCostTracker that keeps track of how much work is done to validate transactions and then uses it along with a new limit (MAX_BLOCK_SIGHASH) to make sure nobody can create a very expensive-to-validate block to try to gum up the network.

There are strong incentives for people not to create expensive-to-validate blocks (miners want their blocks to get across the network as quickly as possible, and work hard on that to minimize the ‘orphan rate’ for their blocks). But one of the principles of secure coding is “belts and suspenders” (“defense in depth” if you want to sound professional or don’t like suspenders).

MAX_BLOCK_SIGHASH is another annoying, arbitrary limit. It is set to 1.3 gigabytes, which is big enough so none of the blocks currently in the block chain would hit it, but small enough to make it impossible to create poison blocks that take minutes to validate.

The final commit, “Do not relay or mine excessive sighash transactions”, is another belt-and-suspenders safety measure. There were already limits in place to reject very large, expensive-to-validate transactions, but this commit adds another check to make absolutely sure a clever attacker can’t trick a miner into putting a crazy-expensive transaction into their blocks.

If you paid attention all the way to here, you have a longer attention span than me. For the non-programmers reading this, I hope I’ve given you some insight into the care and level of thought that goes into changing that “1” to a “2”.